IOCREST PCIe 3.0 x2 to 4x NVMe Expander Review

Building an all-flash NAS has always been a dream for enthusiasts, and the IOCREST PCIe 3.0 x2 to 4x NVMe Expander might be the solution to one of the biggest challenges: PCIe lane limitations. The best part? It costs only $30.

Foreword

Building an all-flash NAS has been a dream of many enthusiasts, including myself. The allure is clear: power-efficient storage, lightning-fast access times, and silent operation even under full load. However, implementing such a system comes with significant challenges, particularly around PCIe connectivity and storage expansion.

Let's examine a typical build scenario:

Modern Intel platforms, particularly 12th Gen and newer, are compelling choices thanks to built-in iGPUs that support SR-IOV and VM pass-through. The B660 platform with DDR4 offers an attractive price-to-performance ratio. However, most B660 boards only provide two to three NVMe slots, and the platform lacks PCIe bifurcation support. Even on Z690 boards that support bifurcation, the x16 slot can only split to x8/x8 - overkill for NVMe drives that use just four lanes each. Add a 10G network card like the Intel X520 or X710 (which need x4 or x8 lanes), and you're quickly running out of PCIe connectivity.

With three 4TB NVMe drives, you'd have 12TB of blazing-fast storage. But here's the crucial question: in a NAS environment where access is primarily through 10G ethernet (maximum theoretical throughput of 1250MB/s), do we really need the full sequential speed potential of each drive?

This review examines an intriguing solution: the IOCREST PCIe 3.0 x2 to 4x NVMe expander. It's a device that trades some raw speed for significantly increased storage density - a compelling proposition for NAS builds.

IOCREST PCIe 3.0 x2 to 4x NVMe Expander Key Features

- Platform PCIe Bifurcation support not required

- PCIe 3.0 x2 interface expanding to four NVMe M.2 slots

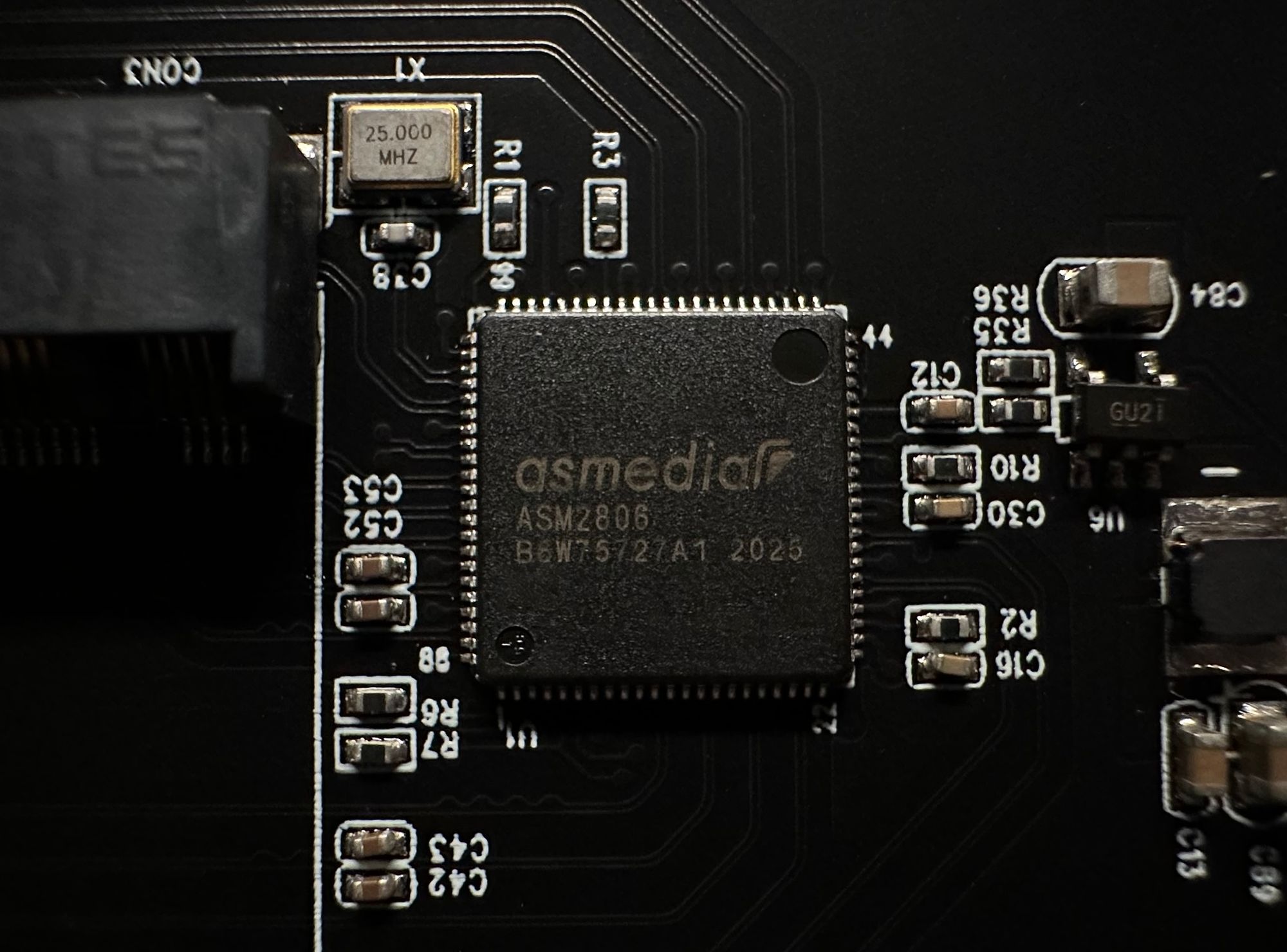

- Powered by Asmedia ASM2806 PCIe packet switch

- ASPM L0s/L1/L2-3/L3 power saving modes support

- Compatible with both PCIe 3.0 and PCIe 4.0 NVMe drives

A PCIe Expander

At the heart of this IOCREST expander lies the Asmedia ASM2806 PCIe switch, a piece of silicon that fans two upstream PCIe 3.0 lanes out to four downstream lanes, one per M.2 slot. Think of it as a smart traffic controller: each NVMe drive gets its own PCIe 3.0 x1 link to the switch, and the ASM2806 routes traffic from all four drives over the shared x2 uplink to the host. A single drive is therefore capped at one lane's worth of bandwidth, and when all four drives are busy they split two lanes' worth between them, which averages out to half a lane per drive.

The ASM2806 belongs to Asmedia's family of PCIe packet switches, which includes various models handling different lane configurations from x8 down to x1. What makes this particular chip interesting for NAS applications is its support for ASPM L0s/L1/L2-3/L3 power saving modes, allowing connected SSDs to drop to power states as low as 5mW during idle periods.

The board's design is elegantly simple: a PCIe x4 connector (electrically wired as x2) feeds into the ASM2806 chip, which then distributes the lanes to four M.2 slots. While the board accepts PCIe 4.0 SSDs, they'll operate in PCIe 3.0 mode due to the switch's specifications. The total theoretical bandwidth cap is 1.97 GB/s - still comfortably above 10G ethernet's 1.25 GB/s limitation.
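The 1.97 GB/s figure can be reproduced from the PCIe 3.0 link parameters. The sketch below assumes the standard 8 GT/s signalling rate and 128b/130b encoding and ignores packet and protocol overhead, so real-world throughput will land somewhat lower; the function names are illustrative only.

```python
# PCIe 3.0 signals at 8 GT/s per lane and uses 128b/130b line encoding.
GT_PER_S = 8e9
ENCODING_EFFICIENCY = 128 / 130


def pcie3_bandwidth_gb_s(lanes: int) -> float:
    """Raw link bandwidth in GB/s (decimal), before protocol overhead."""
    bits_per_s = GT_PER_S * ENCODING_EFFICIENCY * lanes
    return bits_per_s / 8 / 1e9


def ethernet_bandwidth_gb_s(gbit_per_s: float) -> float:
    """Line rate of an Ethernet link in GB/s (decimal)."""
    return gbit_per_s / 8


uplink = pcie3_bandwidth_gb_s(2)     # the expander's x2 connection to the host
per_drive = pcie3_bandwidth_gb_s(1)  # each M.2 slot's x1 link to the switch
ten_gbe = ethernet_bandwidth_gb_s(10)

print(f"x2 uplink:      {uplink:.2f} GB/s")
print(f"x1 per drive:   {per_drive:.2f} GB/s")
print(f"10GbE line rate: {ten_gbe:.2f} GB/s")
```

The x2 uplink clears the 10GbE line rate, while a single x1 drive link on its own does not.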

Installation

Installation is simple: plug the SSDs into the M.2 slots and secure them with M.2 screws (IOCREST includes six in the kit). Then seat the board in a PCIe x4 slot. If you don’t have an open x4 slot, you can even use an x1 slot, but the uplink bandwidth will be halved to PCIe 3.0 x1.

Testing

Test Platform

Motherboard: AsRock B650M PG Riptide

RAM: G.Skill Flare-X DDR5 5600C36, 32GB

OS SSD: Kingston KC3000, PCIe 4.0, 1TB

Storage SSD: Nextorage NEM-PAB, PCIe 4.0, 1TB

Benchmark Software: CrystalDiskMark 8.0.4

Test SSD Group

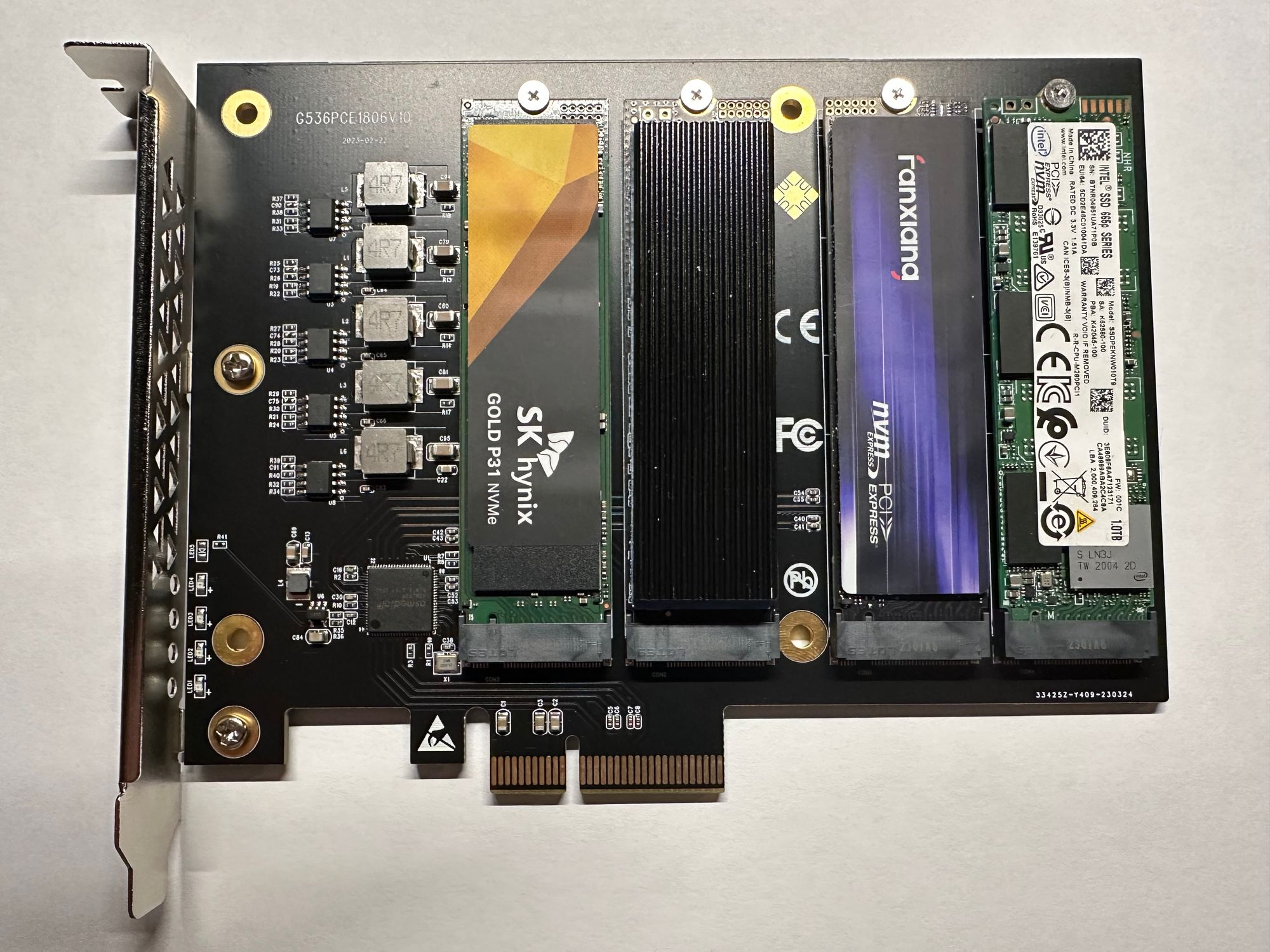

SSD A: Hynix P31 Gold, PCIe 3.0, 1TB, High-end, TLC with DRAM

SSD B: Netac, PCIe 3.0, 512GB, Budget, QLC and DRAM-less

SSD C: Fanxiang S880, PCIe 4.0, 2TB, Mid-range, TLC with DRAM

SSD D: Intel 660p, PCIe 3.0, 1TB, Mid-range, QLC with DRAM

Testing Protocol

Our testing sequence progresses through several stages:

- Baseline Performance: single drive on a native motherboard M.2 slot. Establishes the performance reference point.

- Single Drive on Expander: isolates the impact of PCIe switching overhead.

- Multi-Drive Concurrent Testing: all slots populated. Simultaneous benchmarking to stress the switching capability.

- Real-World File Operations: Windows file copy between drives. Represents typical NAS workloads.

- RAID Configuration: Windows Storage Spaces striped volume. Tests maximum achievable bandwidth.

For each stage, we use two CrystalDiskMark presets:

Peak Performance: Tests maximum theoretical performance (Q8T1, Q32T1)

Real World: Simulates typical usage patterns (Q1T1)

Validation

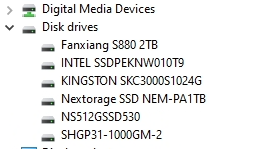

All installed drives showed up in Windows Device Manager without a hitch, which was expected.

Baseline Test

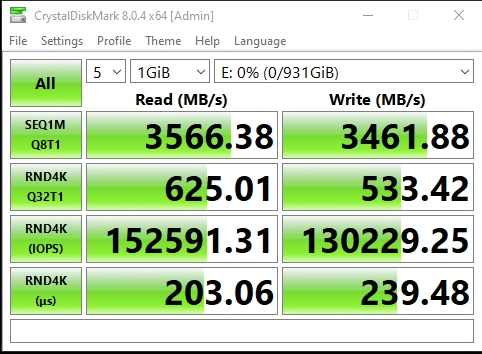

In this test, we used a single Hynix P31 Gold connected to the second M.2 NVMe slot on the motherboard, which is provided by the B650 chipset.

| Test | Read | Write |
|---|---|---|
| Sequential, 1M, Q8T1 | 3566 MB/s | 3462 MB/s |
| Random, 4K, Q32T1 | 625 MB/s | 533 MB/s |
| Random, 4K, IOPS | 152591 | 130229 |
| Random, 4K, Latency | 203 µs | 239 µs |

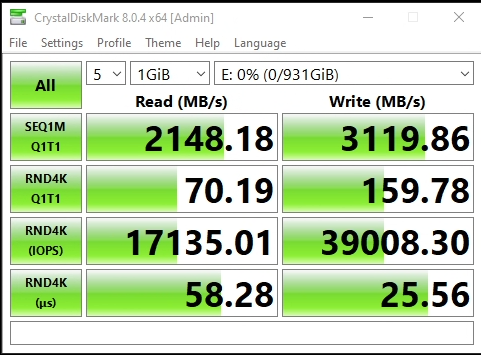

| Test | Read | Write |
|---|---|---|
| Sequential, 1M, Q1T1 | 2148 MB/s | 3120 MB/s |
| Random, 4K, Q1T1 | 70 MB/s | 160 MB/s |
| Random, 4K, IOPS | 17135 | 39008 |
| Random, 4K, Latency | 58 µs | 26 µs |

These baseline results establish our performance reference point. The Hynix P31 Gold shows typical PCIe 3.0 x4 performance, with sequential speeds of about 3.5 GB/s and strong 4K random performance. The real-world preset results show how the drive behaves at low queue depth, which will be important for judging the expander's impact.
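The latency and IOPS rows in the baseline tables can be cross-checked against each other. The sketch below assumes the benchmark keeps the queue full for the whole run, in which case Little's law gives the mean time per request as outstanding requests divided by IOPS; the helper name is illustrative. The results come out in the tens to hundreds of microseconds, close to the reported latency figures.

```python
def mean_latency_us(queue_depth: int, threads: int, iops: float) -> float:
    """Little's law: outstanding requests / throughput = mean time per request."""
    outstanding = queue_depth * threads
    return outstanding / iops * 1e6  # seconds -> microseconds


# (queue depth, threads, IOPS) taken from the baseline tables above
baseline = {
    "Q32T1 read": (32, 1, 152591),
    "Q32T1 write": (32, 1, 130229),
    "Q1T1 read": (1, 1, 17135),
    "Q1T1 write": (1, 1, 39008),
}

for name, (qd, threads, iops) in baseline.items():
    print(f"{name}: ~{mean_latency_us(qd, threads, iops):.0f} us per request")
```

The small gap between these estimates and the reported values at Q32T1 is expected, since the queue is not perfectly full at every instant.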

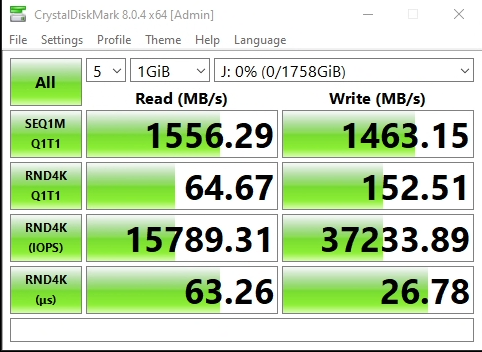

With Expander, Baseline Test

This time, we installed the Hynix P31 Gold onto the expander, and used the PCIe x4 slot on the motherboard, which is also provided by the B650 chipset.

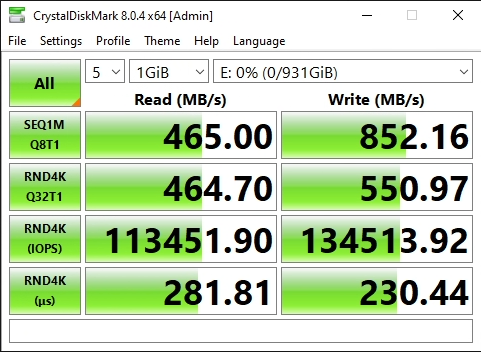

| Test | Read | Write |
|---|---|---|
| Sequential, 1M, Q8T1 | 465 MB/s | 852 MB/s |
| Random, 4K, Q32T1 | 464 MB/s | 551 MB/s |
| Random, 4K, IOPS | 113452 | 134514 |
| Random, 4K, Latency | 282 µs | 230 µs |

| Test | Read | Write |
|---|---|---|
| Sequential, 1M, Q1T1 | 453 MB/s | 832 MB/s |
| Random, 4K, Q1T1 | 67 MB/s | 155 MB/s |
| Random, 4K, IOPS | 16458 | 37918 |
| Random, 4K, Latency | 60 µs | 26 µs |

These results clearly demonstrate the bandwidth limitation of the expander. Sequential writes dropped to roughly one-fourth of native performance (852 vs. 3462 MB/s), in line with the single PCIe 3.0 lane each drive receives, while sequential reads fell further, to about one-eighth (465 vs. 3566 MB/s). Real-world random I/O at Q1T1, by contrast, remained surprisingly strong. The minimal 2 µs increase in Q1T1 read latency (58 to 60 µs) suggests the ASM2806 switch adds negligible overhead for typical storage operations. For NAS usage scenarios where sequential performance isn't the primary concern, these results are quite promising.
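To put the native and expander numbers side by side, the short sketch below computes how much of the native result survives on the expander for a few rows of the tables above. The dictionary keys are labels chosen for this example.

```python
# Hynix P31 Gold, MB/s, from the native-slot and expander tables above
native = {"seq Q8T1 read": 3566, "seq Q8T1 write": 3462,
          "4K Q1T1 read": 70, "4K Q1T1 write": 160}
expander = {"seq Q8T1 read": 465, "seq Q8T1 write": 852,
            "4K Q1T1 read": 67, "4K Q1T1 write": 155}

# Fraction of native throughput retained when the drive sits behind the switch
retained = {test: expander[test] / native[test] for test in native}

for test, fraction in retained.items():
    print(f"{test}: {fraction:.0%} of native")
```

Sequential transfers lose most of their throughput, while low-queue-depth random I/O keeps well over 90% of it.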

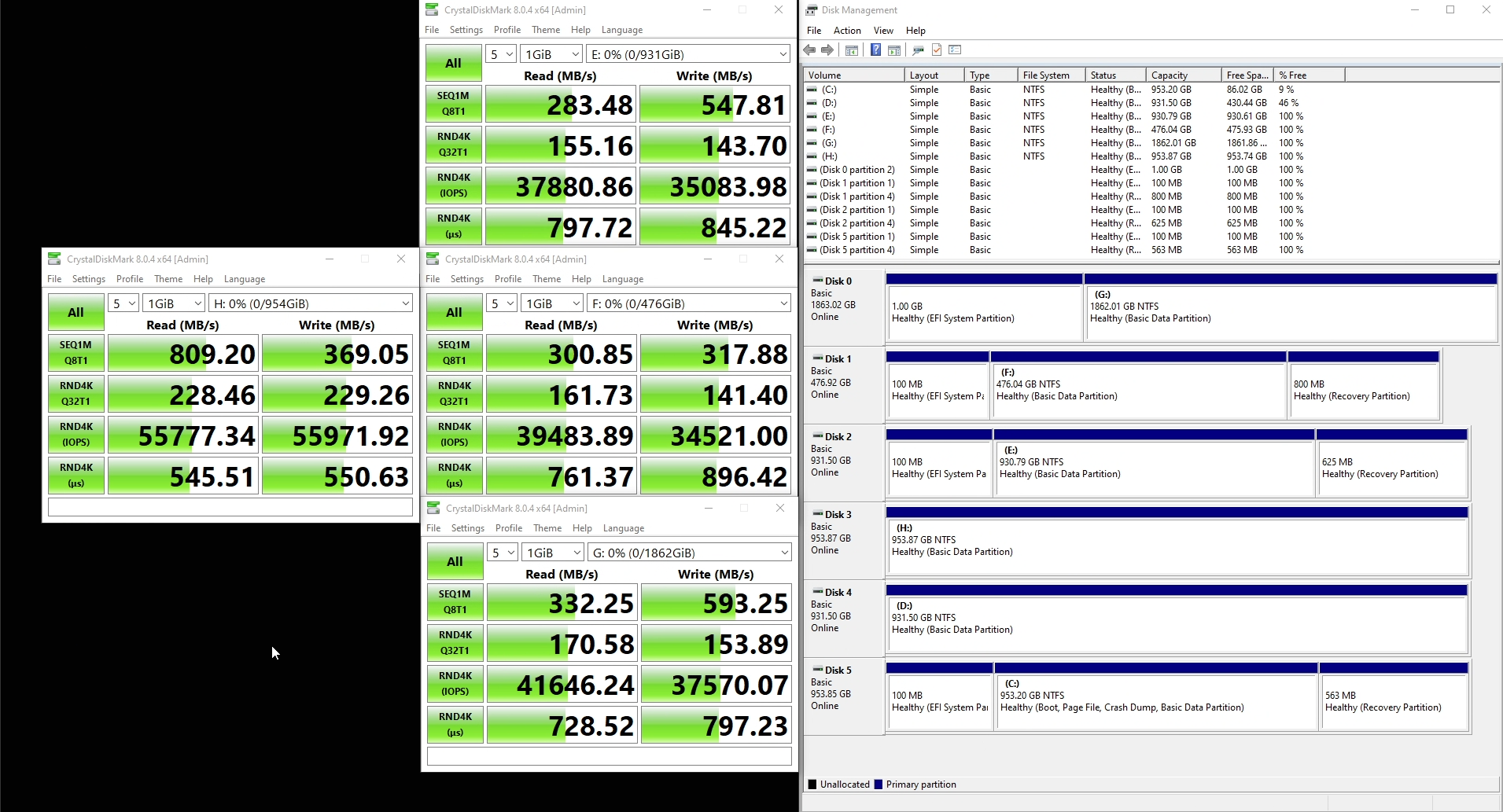

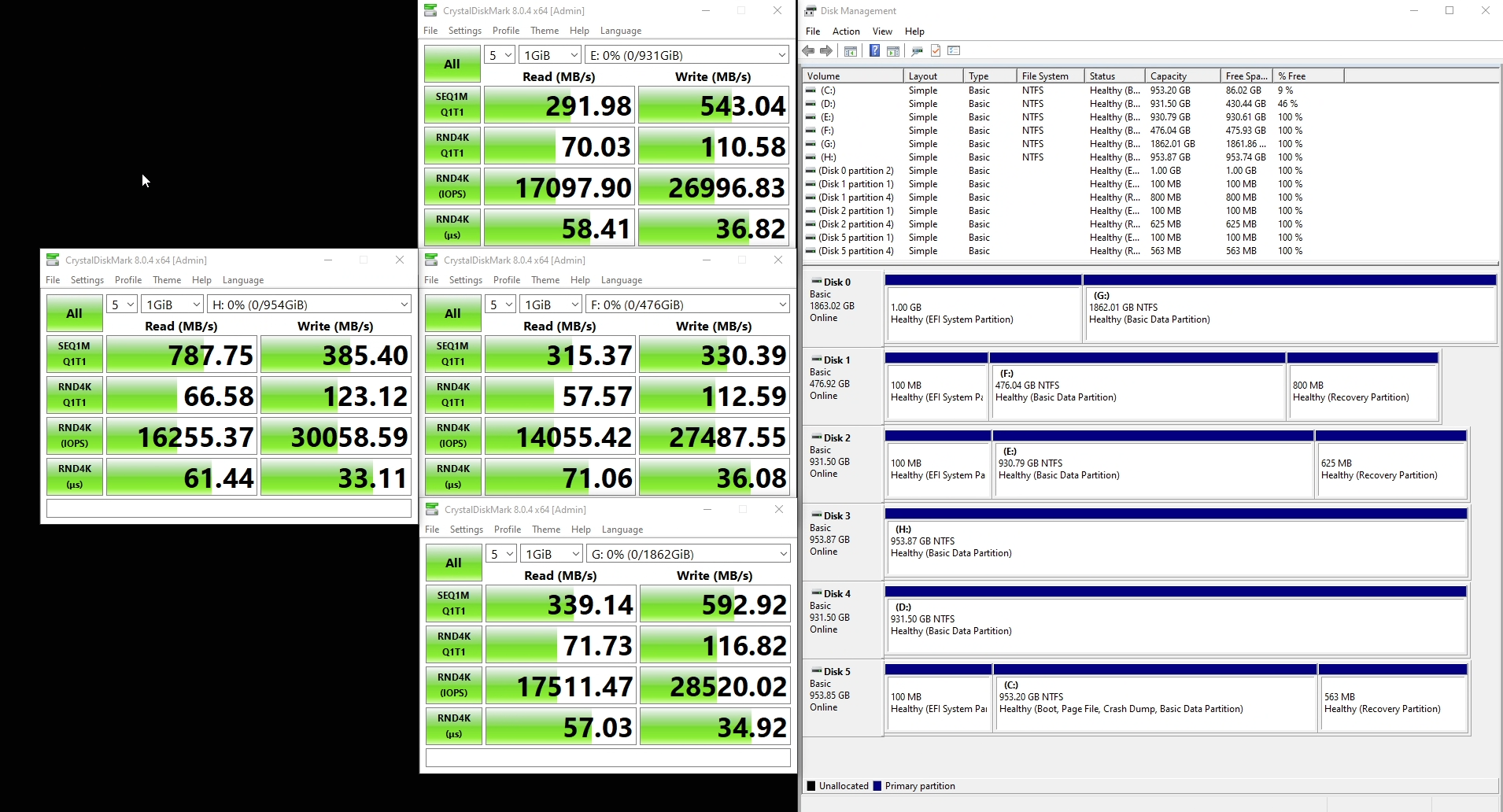

With Expander, All Drives Test

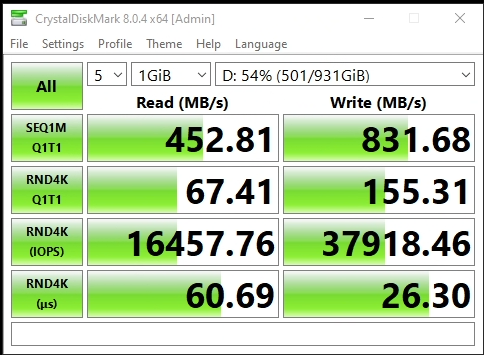

| SSD Model | Read (MB/s) | Write (MB/s) |
|---|---|---|
| Netac 512GB | 301 | 318 |
| Fanxiang S880 2TB | 332 | 593 |
| Hynix P31 Gold 1TB | 283 | 548 |
| Intel 660p 1TB | 809 | 369 |

| SSD Model | Read (MB/s) | Write (MB/s) |
|---|---|---|
| Netac 512GB | 162 | 141 |
| Fanxiang S880 2TB | 170 | 154 |
| Hynix P31 Gold 1TB | 155 | 144 |
| Intel 660p 1TB | 228 | 229 |

The multi-drive testing reveals interesting performance characteristics of the expander. Despite sharing limited PCIe bandwidth, each drive maintains consistent performance levels appropriate to its class. The Intel 660p's surprisingly strong showing, particularly in sequential reads, likely stems from its efficient QLC controller design optimized for limited PCIe lanes. The minimal variation in random 4K performance across drives suggests the ASM2806 switch handles small, bursty I/O operations effectively.
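One way to read the concurrent results is to add up the per-drive figures and compare the total with the x2 uplink ceiling. The sketch below uses the first concurrent table and assumes those numbers are the sequential results and were sustained at the same time, which the screenshots cannot fully confirm; the 1970 MB/s cap is the theoretical figure quoted earlier in this review.

```python
UPLINK_MB_S = 1970  # theoretical PCIe 3.0 x2 ceiling (1.97 GB/s)

# Concurrent results per drive: (read MB/s, write MB/s)
concurrent = {
    "Netac 512GB": (301, 318),
    "Fanxiang S880 2TB": (332, 593),
    "Hynix P31 Gold 1TB": (283, 548),
    "Intel 660p 1TB": (809, 369),
}

total_read = sum(read for read, _ in concurrent.values())
total_write = sum(write for _, write in concurrent.values())

# Share of the combined read throughput that each drive obtained
read_share = {name: read / total_read for name, (read, _) in concurrent.items()}

print(f"aggregate read:  {total_read} MB/s ({total_read / UPLINK_MB_S:.0%} of uplink)")
print(f"aggregate write: {total_write} MB/s ({total_write / UPLINK_MB_S:.0%} of uplink)")
for name, share in read_share.items():
    print(f"{name}: {share:.0%} of aggregate reads")
```

Under these assumptions the four drives together use most of the uplink in both directions, and the Intel 660p alone accounts for nearly half of the combined reads.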

| SSD Model | Read (MB/s) | Write (MB/s) |
|---|---|---|
| Netac 512GB | 315 | 330 |
| Fanxiang S880 2TB | 339 | 593 |
| Hynix P31 Gold 1TB | 292 | 543 |
| Intel 660p 1TB | 788 | 385 |

| SSD Model | Read (MB/s) | Write (MB/s) |
|---|---|---|
| Netac 512GB | 58 | 113 |
| Fanxiang S880 2TB | 72 | 117 |
| Hynix P31 Gold 1TB | 70 | 111 |
| Intel 660p 1TB | 67 | 123 |

Under real-world conditions with typical queue depths, the differences between drive models become less pronounced. This indicates that for everyday NAS operations, where queue depths rarely exceed 1 or 2, the choice of SSD becomes less critical. Even the budget-oriented Netac drive provides acceptable performance for typical file serving workloads.

| SSD Model | Read (µs) | Write (µs) |
|---|---|---|
| Netac 512GB | 71 | 36 |
| Fanxiang S880 2TB | 57 | 35 |
| Hynix P31 Gold 1TB | 58 | 37 |
| Intel 660p 1TB | 61 | 33 |

Latency results are particularly encouraging for NAS applications. Read latencies of 57-71 µs and write latencies of 33-37 µs across all four drives indicate that the expander's switching mechanism handles concurrent access efficiently. The small variance between drives suggests the ASM2806 provides fair access allocation regardless of the drive's native capabilities.

With Expander, All Drives, Windows Copy

| From -> To | Speed (MB/s) |
|---|---|
| MB NVMe -> Hynix P31 Gold | 718 |
| Hynix -> Netac | 783 |
| Netac -> Fanxiang | 781 |
| Fanxiang -> Intel | 662 |

The Windows copy tests demonstrate real-world transfer scenarios that users are likely to encounter. The consistent ~660-780 MB/s transfer speeds between drives show that the expander can efficiently manage simultaneous read and write operations through its switching fabric. A single copy stream at these speeds fills roughly 53-63% of a 10GbE link (1250 MB/s), so one drive alone will not saturate the network, but the expander remains a reasonable fit for high-speed NAS implementations.

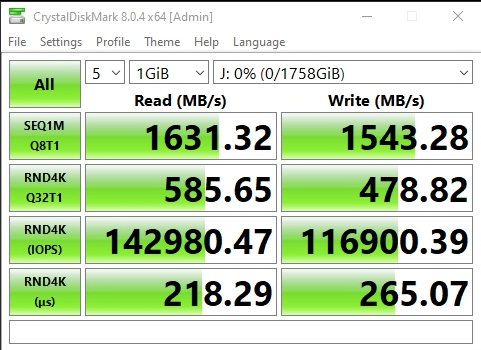

With Expander, All Drives, RAID 0 (Windows Striped Volume)

| Test | Read | Write |
|---|---|---|
| Sequential, 1M, Q8T1 | 1631 MB/s | 1543 MB/s |
| Random, 4K, Q32T1 | 586 MB/s | 479 MB/s |
| Random, 4K, IOPS | 142980 | 116900 |
| Random, 4K, Latency | 218 µs | 265 µs |

| Test | Read | Write |
|---|---|---|
| Sequential, 1M, Q1T1 | 1556 MB/s | 1463 MB/s |
| Random, 4K, Q1T1 | 65 MB/s | 153 MB/s |
| Random, 4K, IOPS | 15789 | 37234 |
| Random, 4K, Latency | 63 µs | 27 µs |

The RAID-0 configuration demonstrates the maximum potential of the expander when all drives work in concert. While the ~1.6 GB/s sequential speeds fall short of native NVMe performance, they still exceed typical 10GbE network bandwidth requirements. The random I/O results suggest that striping provides minimal benefit for typical workloads, reinforcing that more sophisticated storage solutions like Windows Storage Spaces might be more appropriate for production environments.
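As a rough check on how close the striped volume gets to the limits involved, the sketch below compares the Q8T1 sequential results with the theoretical x2 uplink (1970 MB/s) and the 10GbE line rate (1250 MB/s). Both ceilings are theoretical values that ignore protocol overhead, so treat the percentages as approximate.

```python
UPLINK_MB_S = 1970   # theoretical PCIe 3.0 x2 ceiling
TEN_GBE_MB_S = 1250  # theoretical 10GbE line rate

# Striped volume, sequential 1M Q8T1, from the table above
stripe = {"read": 1631, "write": 1543}

# Fraction of the uplink used, and margin above what 10GbE can carry
uplink_use = {op: mb_s / UPLINK_MB_S for op, mb_s in stripe.items()}
headroom = {op: mb_s / TEN_GBE_MB_S - 1 for op, mb_s in stripe.items()}

for op in stripe:
    print(f"{op}: {uplink_use[op]:.0%} of uplink, "
          f"{headroom[op]:.0%} above 10GbE line rate")
```

The stripe reaches roughly four-fifths of the uplink and leaves a comfortable margin over the network link, which is the bottleneck in a 10GbE NAS.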

Pros and Cons

Pros:

- Enables four NVMe drives in a single PCIe slot

- Sufficient performance for 10GbE networks

- Excellent power efficiency with ASPM support

- Compatible with both PCIe 3.0 and 4.0 drives

- Simple installation process

- No additional power connection required

- Low price at $30 USD

Cons:

- Limited to PCIe 3.0 x1 bandwidth per drive

- Not suitable for applications requiring maximum NVMe speeds

- Full Height, which limits use cases in smaller enclosures like the Minisforum MS-01 and 1U servers.

Conclusion

The IOCREST PCIe 3.0 x2 to 4x NVMe expander proves to be an ingenious solution for users looking to maximize NVMe storage capacity in systems with limited PCIe lanes. While it does impose bandwidth limitations - reducing each drive to PCIe 3.0 x1 speeds - our testing reveals this tradeoff is quite reasonable for many NAS applications.

The key findings from our testing:

- Aggregate sequential speeds (about 1.6 GB/s in RAID 0) remain above the 10GbE network ceiling (1.25 GB/s), although a single drive on the expander tops out below 1 GB/s

- Random I/O performance, especially at typical queue depths (Q1T1), remains strong

- Latency overhead from the ASM2806 switch is minimal, adding only about 2-3 µs at Q1T1

- RAID 0 configuration achieves about 1.6 GB/s reads and 1.5 GB/s writes, demonstrating efficient use of the available PCIe bandwidth

- All tested drives, from budget to high-end models, worked reliably with the expander

For users building an all-flash NAS or expanding storage capacity where maximum sequential speeds aren't critical, this expander offers an excellent value proposition. It enables fitting four NVMe drives in a single PCIe slot while maintaining more than enough performance for 10GbE networking. The added benefit of ASPM power saving modes makes it particularly suitable for 24/7 NAS operations.

You can find this expander on AliExpress for $30 USD. I'm sharing this link purely for reference - I'm not affiliated with AliExpress and receive no compensation from purchases.